Configuring a PC for Artificial Intelligence / Machine Learning

Published 31st Jul 2023, updated 5th Feb 2026 - 8 minute read

CPU (Processor)

- Which CPU to pick for AI/ML?

- Do more CPU cores help in AI/ML?

- What about consumer grade CPUs?

RAM (Memory)

- How much RAM does AI/ML need?

GPU (Video/Graphics Card)

- Which GPU to pick for AI/ML?

- How much VRAM (GPU memory) does AI/ML need?

- Will multiple GPUs improve performance in AI/ML?

What storage drive(s) should I use for AI/ML?

Machine Learning (ML) and Artificial Intelligence (AI) applications range from traditional regression models, non-neural network classifiers, and statistical models, as found in Python's scikit-learn and the R programming language, through to Deep Learning (DL) models built with frameworks like PyTorch and TensorFlow. Because AI/ML models vary so much, the hardware recommendations here follow standard patterns, and your specific application may have different optimal requirements. This guide focuses on hardware for ML/DL model training rather than inference.

CPU (Processor)

In most cases, GPU acceleration dominates performance for AI/ML. Even so, the CPU & motherboard serve as the foundation that supports the GPU. The CPU can also be used for data analysis and clean-up in preparation for training on the GPU, and as a compute engine in cases where the GPU's onboard memory (VRAM) is not sufficient.

Which CPU to pick for AI/ML?

The two main platforms to consider are Intel's Xeon W and AMD's Threadripper Pro. Both offer exceptional reliability, supply the abundant PCI-Express lanes needed for multiple GPUs, and support huge RAM capacities with ease. We recommend a single-socket CPU workstation to reduce memory mapping issues.

Do more CPU cores help in AI/ML?

The choice of CPU core count depends on the expected load for non-GPU tasks. As a rule of thumb, you will want at least 4 cores for each GPU accelerator. However, if your workload has a significant CPU compute component, then 32 or even 64 cores could be ideal. In most cases, though, a 16-core CPU should be considered the minimum.
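The rule of thumb above can be sketched as a small helper. This is only an illustration: the function name and its parameters are hypothetical, and the defaults simply encode the figures quoted in this section (4 cores per GPU, 16 cores as a floor).

```python
def recommended_cpu_cores(num_gpus: int, cores_per_gpu: int = 4, floor: int = 16) -> int:
    """Return a starting-point core count for a GPU training workstation.

    Hypothetical helper: applies the per-GPU rule of thumb, then
    enforces the suggested minimum core count.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return max(floor, num_gpus * cores_per_gpu)


for gpus in (1, 2, 4):
    print(f"{gpus} GPU(s): at least {recommended_cpu_cores(gpus)} cores")
```

With these defaults the 16-core floor decides the result for systems of up to four GPUs, so the per-GPU figure only starts to matter if you raise it to account for heavy CPU-side work.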

What about consumer grade CPUs?

The main reason for recommending Xeon W or Threadripper Pro is the number of PCI-Express lanes these CPUs support, which determines how many GPUs can be used. These platforms have enough PCIe lanes for three or four GPUs (depending on motherboard layout, chassis space, and power supply). They also support 8 memory slots, allowing for more than the 128GB or 192GB limits that consumer platforms have with just their 4 memory slots. Lastly, these platforms are considered enterprise grade, so they are more likely to be comfortable under heavy sustained loads.

If, after reviewing the considerations for RAM & GPU below, you find that you can squeeze into a consumer grade platform - fantastic! It will be a great cost saver.

As for the choice between AMD and Intel, it will most likely come down to personal preference, although Intel may be preferable if your workflow can leverage Intel's oneAPI.

RAM (Memory)

RAM performance and capacity requirements depend on the tasks being run, but they can be a very important consideration, and there are some minimum recommendations.

How much RAM does AI/ML need?

You will want at least twice as much system RAM as there is total GPU memory in your system. For example, a system with 2x Nvidia GeForce RTX 5090 GPUs (64GB total VRAM) should have at least 128GB of system RAM.
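As a quick way to apply this rule to other configurations, here is a minimal sketch. The function name and the `multiplier` parameter are hypothetical; the default of 2 is the "double the VRAM" guideline from this section, not a hard requirement.

```python
def recommended_system_ram_gb(vram_per_gpu_gb: int, num_gpus: int, multiplier: int = 2) -> int:
    """Return a minimum system RAM figure (GB) from total GPU memory.

    Hypothetical helper: total VRAM across all GPUs times the multiplier.
    """
    total_vram_gb = vram_per_gpu_gb * num_gpus
    return total_vram_gb * multiplier


# The example from the text: 2x GPUs with 32GB of VRAM each.
print(recommended_system_ram_gb(vram_per_gpu_gb=32, num_gpus=2))
```

Treat the result as a floor. If you plan to load full data sets into memory for analysis, that requirement can easily exceed what the VRAM rule suggests.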

The other thing to consider is how much data analysis is needed. It is often the case that you will want to be able to pull a full data set into memory for processing and statistical work. This can mean large memory requirements, as much as 1TB (or sometimes more) of system RAM. This plays into why we pitch enterprise grade platforms that can house large capacities of RAM over the consumer platforms.

GPU (Video/Graphics Card)

GPU acceleration has been the driving force unlocking rapid advancements in artificial intelligence & machine learning research since the mid-2010s. GPUs offer significant performance improvements over CPUs for deep learning training.

Which GPU to pick for AI/ML?

Nvidia dominates the field of GPU compute acceleration and is therefore the unrivalled choice.

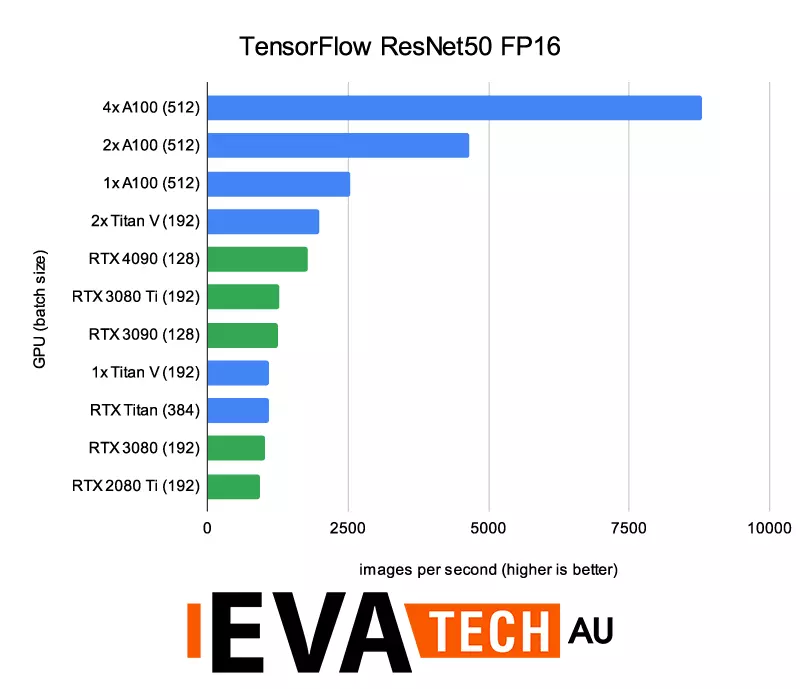

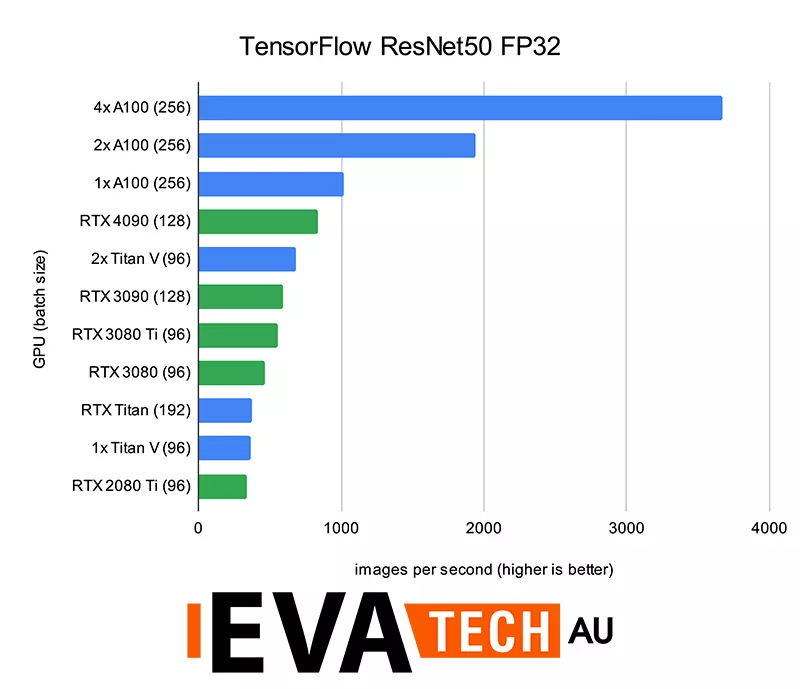

Almost any Nvidia GPU will work, but modern, high-end models are a particularly good choice as they generally offer better performance, especially when power consumption is taken into consideration. Thankfully, most AI/ML applications that have GPU acceleration work well with single precision (FP32). In many cases, using Tensor cores (FP16) with mixed precision provides enough accuracy for deep learning model training and offers significant performance gains over standard FP32. Most recent Nvidia GPUs have this capability, except lower-end cards.
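To get a feel for what FP16 trades away compared with FP32, the sketch below round-trips a value through each storage format using only Python's standard `struct` module. It illustrates the number formats themselves; it does not use a GPU, Tensor cores, or any framework's mixed precision feature, and the `round_trip` helper is a hypothetical name.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


value = 0.1
fp16 = round_trip(value, "<e")  # "e" = IEEE 754 half precision (FP16)
fp32 = round_trip(value, "<f")  # "f" = IEEE 754 single precision (FP32)

print(f"FP16: {struct.calcsize('<e')} bytes, error {abs(fp16 - value):.2e}")
print(f"FP32: {struct.calcsize('<f')} bytes, error {abs(fp32 - value):.2e}")
```

FP16 takes half the space of FP32 per value, at the cost of a larger rounding error. Mixed precision training aims to keep the speed and memory benefits of FP16 while holding accuracy-sensitive steps in FP32.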

Consumer-grade graphics cards like Nvidia's GeForce RTX 5080 & 5090 offer great performance, but can be difficult to fit into a system with more than two GPUs because of their large physical size. Professional-grade GPUs like Nvidia's RTX A5000 & A6000 are stellar options which come with greater VRAM capacities and are designed to work in multi-GPU configurations.

How much VRAM (GPU memory) does AI/ML need?

This is largely dependent on the "feature space" of the model training. 8GB per GPU is considered minimal, as it could certainly be a limiting factor in lots of applications. 12GB-24GB is common and readily available on high-end GPU options. For larger data problems, the RTX A6000, with its 48GB of VRAM, is recommended. It suits data with a "large feature size", such as higher resolution images or 3D images, although that much VRAM is not commonly needed.
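To illustrate why "feature size" matters, the following sketch estimates the memory taken by a single batch of input data. The helper name and the example shapes are assumptions chosen for illustration. It counts only the raw input tensor; model weights, activations, gradients, and optimizer state typically need far more memory on top of this.

```python
import math

MIB = 1024 ** 2


def input_batch_bytes(batch_size: int, channels: int, spatial_shape: tuple,
                      bytes_per_value: int = 4) -> int:
    """Bytes needed to hold one input batch (default 4 bytes per value = FP32)."""
    values = batch_size * channels * math.prod(spatial_shape)
    return values * bytes_per_value


# Hypothetical example shapes, all with a batch size of 8.
examples = {
    "224x224 RGB images": (3, (224, 224)),
    "1024x1024 RGB images": (3, (1024, 1024)),
    "256x256x256 single-channel volumes": (1, (256, 256, 256)),
}
for name, (channels, shape) in examples.items():
    size_mib = input_batch_bytes(8, channels, shape) / MIB
    print(f"{name}: {size_mib:.1f} MiB per batch")
```

Moving from small 2D images to high resolution or 3D data multiplies the input size many times over, and the intermediate activations inside the model tend to grow with it.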

Will multiple GPUs improve performance in AI/ML?

Generally, yes. Most of our customers tend to start off with one GPU with the idea of adding more as they go. To allow for this, make sure the system's power supply can deliver enough power, that there is enough physical space and cooling within the system, and that the motherboard can support the extra GPUs.

It is worth remembering that a single GPU like Nvidia's RTX 5090 or A5000 provides significant performance by itself which may be enough for your application.

What storage drive(s) should I use for AI/ML?

With the CPU, RAM, & GPU often taking so much of the focus when configuring a PC for AI/ML, the storage configuration can get left out, or become an afterthought once most of the budget is already spent. This can be a huge and costly mistake! If the storage cannot keep up with the CPU, RAM, & GPU, it will create a bottleneck, and then it will not matter how fast or capable the other hardware is.

There are a few main types of storage on offer today, each with its pros and cons, so it makes sense to understand them. An AI/ML PC will often use a combination of storage drives.

- SATA HDDs: Traditional spinning hard disk drives, the type most people are likely familiar with already. While these are the slowest storage option we offer, they are extremely affordable compared to their SSD counterparts, and also come in much larger capacities than SSDs. They make very good long-term storage for archival purposes and the like, but in most instances are not ideal to work off directly. Speeds max out at around 150MB/s.

- SATA SSDs: Operating on the same protocol as the above HDDs (SATA) these Solid-State Drives contain no moving parts and are multiple times faster than HDDs, but are more costly for the benefit. These max out around the 540MB/s range.

- NVMe M.2 SSDs: These currently come in two flavours on our website: Gen4 drives and Gen5 drives. Gen4 NVMe M.2 SSDs generally top out at around 7,000MB/s, while Gen5 NVMe M.2 SSDs start in the 9,000MB/s range and go up to 14,000MB/s.

These M.2 drives also connect directly to the motherboard, which frees up the case/chassis drive bays for future additions that you may want. Note that this may limit the number of M.2 drives you can have in total, as some motherboards only support 2-3.
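To put the speed figures above into perspective, this sketch estimates the best-case time to read a data set once from each drive type. The 500GB data set size is a hypothetical example, and the speeds are the approximate peak figures quoted above; real-world throughput, especially with many small files, is usually lower.

```python
# Approximate peak sequential speeds quoted in this article, in MB/s.
DRIVE_SPEEDS_MB_S = {
    "SATA HDD": 150,
    "SATA SSD": 540,
    "Gen4 NVMe M.2 SSD": 7_000,
    "Gen5 NVMe M.2 SSD (top end)": 14_000,
}


def read_time_seconds(dataset_gb: float, speed_mb_s: float) -> float:
    """Best-case time to read a data set once, using 1GB = 1,000MB."""
    return dataset_gb * 1_000 / speed_mb_s


dataset_gb = 500  # hypothetical data set size
for drive, speed in DRIVE_SPEEDS_MB_S.items():
    minutes = read_time_seconds(dataset_gb, speed) / 60
    print(f"{drive}: about {minutes:.1f} minutes")
```

At these peak figures, a single pass over 500GB takes close to an hour on a hard drive and under a minute on a top-end Gen5 NVMe drive. That difference is repeated every time the data is reloaded.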

Don't forget, it's important that you are in control of your data backups at all times!

For professional AI/ML work, we encourage a two-drive setup with the capacity and type of each depending on your desired budget and performance level.

- OS & Applications (SATA SSD or NVMe M.2 SSD) - Should be large enough to house your operating system plus any other applications you require as part of your workflow (eg: 500GB+). An SSD of either type will allow the OS and programs to start up fast, which makes the overall operation of the system feel snappy.

- Project files (SATA SSD or NVMe M.2 SSD) - Having your projects on their own drive means that if your primary drive has an issue that requires an OS reinstall, you won't need to worry about also losing the projects you need to work on. Opening & saving to an SSD will be multiple times faster than an HDD too! An SSD of either type is suitable for your project files, with the decision coming down to your budget.

- Optional (SATA SSD or HDD) - If data hoarding is your thing, it will likely be necessary to have additional drives. These can go towards organisation of your work, backup, archiving, etc. so at your discretion, and according to budget constraints, you can safely pick either an SSD or HDD.

External drives are a cause for concern as they are known to contribute to performance and stability issues. External drives should therefore only be used to move data to the PC - then work on it - and move it back onto an external drive if needed, but you should not be working directly from an external drive.
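The copy-first workflow described above can be sketched with Python's standard library. The `stage_locally` helper is a hypothetical name, and the demo uses temporary folders as stand-ins for an external drive and a local SSD so that it can run anywhere.

```python
import shutil
import tempfile
from pathlib import Path


def stage_locally(external_dir: Path, local_dir: Path) -> Path:
    """Copy a data set folder from an external drive onto local storage.

    Returns the path of the local copy, which is what you then work from.
    """
    target = local_dir / external_dir.name
    shutil.copytree(external_dir, target, dirs_exist_ok=True)
    return target


# Demo: temporary folders stand in for the two drives.
with tempfile.TemporaryDirectory() as tmp:
    external = Path(tmp) / "external_drive" / "dataset"
    external.mkdir(parents=True)
    (external / "sample.txt").write_text("example data")

    local = Path(tmp) / "local_ssd"
    local.mkdir()

    working_copy = stage_locally(external, local)
    print(sorted(p.name for p in working_copy.iterdir()))
```

Once the work is finished, the same approach in reverse moves results back onto the external drive.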

5/2 Fiveways Boulevarde, 3173 VIC

Monday - Friday 10am-6pm

+61 (03) 9020 7017

ABN 83162049596

Evatech Pty Ltd